3D modeling is now a common part of many fields – from films and video games to architecture and product design. One of the key steps in creating realistic 3D objects is texturing: "applying" a surface to the model, including the colors, materials, and details that give it a believable appearance. However, this step can be demanding and time-consuming, and it requires significant artistic and technical skill. This is where StableGen comes in. It is an AI tool created as part of the bachelor's thesis of Bc. Ondřej Sakala, a student at the Faculty of Information Technology of the Czech Technical University in Prague (FIT CTU).

StableGen is an extension for the popular 3D program Blender that lets users easily generate detailed textures using so-called diffusion models, AI models that can create images from text prompts or visual references. A user can simply write that they want a texture of rusty metal or aged wood, and StableGen will generate it in moments.

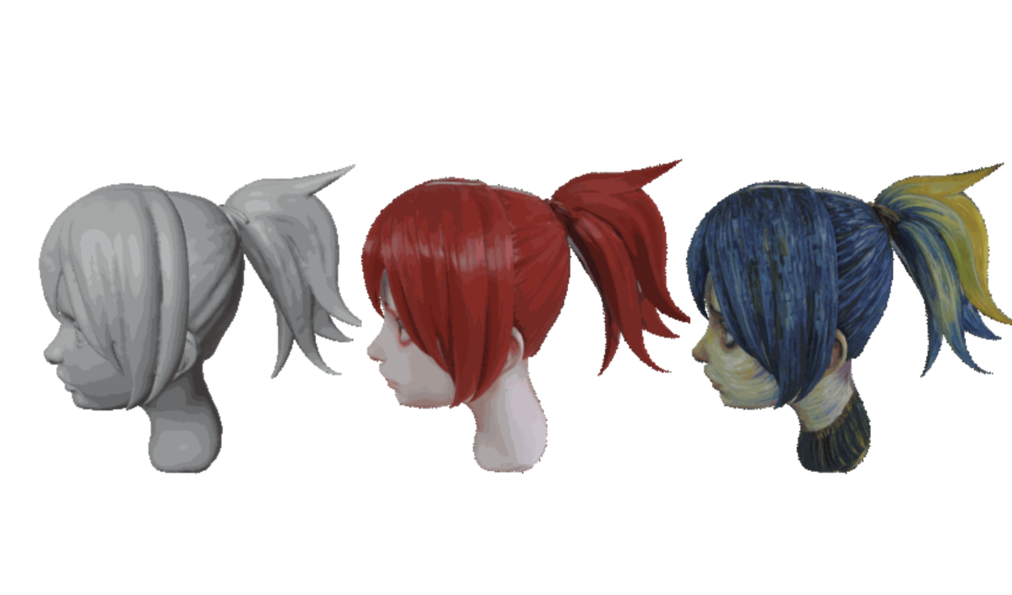

“One of the greatest advantages of this tool is that the textures are not just visually appealing, but they actually fit the 3D objects – even when the models are complex. That’s because the AI generates textures based on the geometry of the model or the entire scene,” says Ondřej.

StableGen also accepts reference images, so a specific style or pattern can easily be transferred onto a 3D model. Users can also adjust the level of detail and the overall appearance as needed.

The entire project is open-source and freely available on GitHub, so anyone can try it out – whether they’re a professional 3D artist, a student, or an enthusiast modeling their own world at home.

“I plan to continue working on the tool. Several specific improvements are already in the pipeline, such as implementing even more advanced AI models or adding support for the Mac platform,” Ondřej adds.